Devlog 2 - Animating Music

It's been a while since I last wrote a devlog. Let's fix that by talking about a fundamental aspect of Symphonies Garden: the sound animations. In Symphonies Garden, many elements use the music's data to create visual animations, which makes the musical side of the game stand out even more.

Here I'll explain the technical implementation of these visuals: how to read audio data in JavaScript (or TypeScript), and how to use it in CSS styles.

Acquiring the data

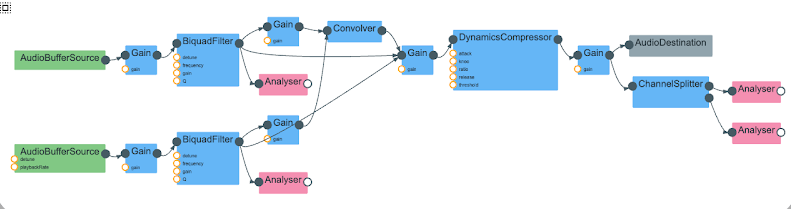

This part requires some knowledge of the Web Audio API. I won't explain it at length, but basically, modern audio in JavaScript works through a graph of nodes. Several nodes are created and connected to each other in order to carry the audio data, transform it, and output it to the speakers. For example, an AudioBufferSourceNode plays a decoded sound file, and is typically connected to a GainNode to adjust its volume, which can then be connected to context.destination to be heard through the speakers.

There are several audio nodes that create various effects. The one that interests us here is the AnalyserNode, which doesn't change the data passing through it, but lets us read it in real time. It takes an fftSize parameter (for Fast Fourier Transform), a power of 2 between 32 and 32768 that determines how precisely the frequencies are read. It defaults to 2048, but 256 was enough for my needs. Then we have to create a Uint8Array or a Float32Array, depending on how we want to read the data. Don't worry about the specifics, I'll explain them later. This array is the one into which the data will be written each frame.

```ts
const FFT_SIZE = 256;
const analyser = context.createAnalyser();
analyser.fftSize = FFT_SIZE;
const musicData = new Float32Array(analyser.fftSize);
```
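To make the effect of fftSize more concrete, here is a small sketch of the numbers it controls. It's plain arithmetic (no Web Audio needed), the helper name `describeFft` is hypothetical, and the 44.1 kHz sample rate is an assumption; in practice you'd use `context.sampleRate`. The 32 to 32768 bounds are the range the Web Audio API accepts for fftSize.

```ts
// Hypothetical helper: what a given fftSize means in practice
interface FftInfo {
  binCount: number;   // same as analyser.frequencyBinCount (fftSize / 2)
  binWidthHz: number; // width of one frequency bin, in Hz
  windowMs: number;   // duration of audio covered by one analysis window
}

function describeFft(fftSize: number, sampleRate: number): FftInfo {
  // The Web Audio API only accepts powers of two from 32 to 32768
  const isPowerOfTwo = Number.isInteger(fftSize) && (fftSize & (fftSize - 1)) === 0;
  if (!isPowerOfTwo || fftSize < 32 || fftSize > 32768) {
    throw new RangeError(`Invalid fftSize: ${fftSize}`);
  }
  return {
    binCount: fftSize / 2,
    binWidthHz: sampleRate / fftSize,
    windowMs: (fftSize / sampleRate) * 1000,
  };
}

// Assuming a 44.1 kHz context
const small = describeFft(256, 44100); // 128 bins of ~172 Hz, ~5.8 ms window
const dflt = describeFft(2048, 44100); // 1024 bins of ~21.5 Hz, ~46 ms window
```

A larger fftSize gives narrower frequency bins, but each read covers a longer slice of audio, which suggests why a small value like 256 can be enough when you only need a volume level.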

Then the analyser needs to be connected to a node the music comes out of (generally a GainNode). For Harmonies Garden, I use my own library, Orchestre-JS, which already manages the graph of audio nodes, but also provides ways to plug its output into other nodes. To get the combined music of all instruments, I can use the master property of the Orchestre instance.

```ts
orchestre.master.connect(analyser);
```

But I also want to visualize the sound of each instrument individually, so that each tile has its own animation based on the sound it produces. Each one thus needs its own AnalyserNode. They can be connected with Orchestre's handy connect method.

```ts
TILE_NAMES.forEach((tile) => {
  const analyser = context.createAnalyser();
  analyser.fftSize = FFT_SIZE;
  const data = new Float32Array(analyser.fftSize);
  const multiplier = VOLUME_MULTIPLIER;
  sounds[tile] = { analyser, data, multiplier, lastValue: 0 };
  orchestre.connect(tile, analyser);
});
```

Everything is set up. We are now ready to play the music, and read the output.

Reading music data

AnalyserNode has two methods to read the current music data: getByteFrequencyData and getByteTimeDomainData. They write Uint8 values, but you can also get Float32 results with their counterparts getFloatFrequencyData and getFloatTimeDomainData.

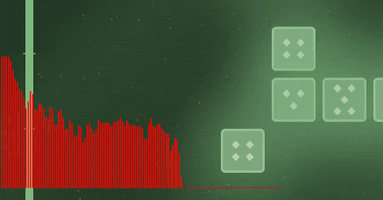

getByteFrequencyData copies the frequency data into a provided Uint8Array (created the same way as the Float32Array at the start). This array should be half the size of fftSize (a value also available as analyser.frequencyBinCount). At each index, it writes a value between 0 and 255 corresponding to the decibels in a range of frequencies (a "frequency bin"). If you use the float method, you get the "accurate" decibel value, which typically ranges from -Infinity to 0. The higher fftSize is, the larger the array, and the narrower each frequency bin. To give you an idea of what the data looks like, this method is perfect for spectrum-style visuals, where each bar follows one frequency band.
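For reference, the Web Audio specification defines the byte version as a linear rescaling of the decibel values from the analyser's minDecibels to maxDecibels range (-100 dB to -30 dB by default) onto 0 to 255, with values outside that range clipped. Bin `k` corresponds to the frequency `k * sampleRate / fftSize`. The sketch below reproduces both conversions as plain functions, which can help when picking which bins to read; the function names are made up for illustration.

```ts
// Default AnalyserNode decibel range (minDecibels / maxDecibels)
const DEFAULT_MIN_DB = -100;
const DEFAULT_MAX_DB = -30;

// Mirrors the spec's conversion from a float dB value to a byte (0..255)
function dbToByte(db: number, minDb = DEFAULT_MIN_DB, maxDb = DEFAULT_MAX_DB): number {
  const scaled = Math.floor((255 * (db - minDb)) / (maxDb - minDb));
  // Values outside [minDb, maxDb] are clipped
  return Math.min(255, Math.max(0, scaled));
}

// Frequency (in Hz) that frequency bin `k` corresponds to
function binFrequency(k: number, sampleRate: number, fftSize: number): number {
  return (k * sampleRate) / fftSize;
}

// Inverse: index of the bin closest to a given frequency
function frequencyToBin(hz: number, sampleRate: number, fftSize: number): number {
  return Math.round((hz * fftSize) / sampleRate);
}
```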

getByteTimeDomainData, on the other hand, gives the waveform of the audio. Like above, it takes an array and writes the result into it. This time, the array should be the size of fftSize. The values are between 0 and 255, with silence at 128 (or between -1.0 and 1.0 with the float version, which is more convenient). This method is ideal for oscilloscope-style visuals that draw the wave itself.
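If you only have the byte version at hand, the spec defines each byte as `floor(128 * (1 + x))` clipped to 0..255, where `x` is the float sample. A minimal sketch to turn those bytes back into approximate -1..1 samples and take their peak (the same idea as the float code used further down); the names are illustrative.

```ts
// Byte waveform value -> approximate float sample in [-1, 1)
function byteToSample(b: number): number {
  return (b - 128) / 128;
}

// Peak amplitude of a byte waveform, as filled by getByteTimeDomainData
function bytePeak(waveform: Uint8Array): number {
  let peak = 0;
  for (let i = 0; i < waveform.length; i++) {
    peak = Math.max(peak, Math.abs(byteToSample(waveform[i])));
  }
  return peak;
}
```

The byte version only has steps of 1/128, so the float method gives a finer reading for the same cost.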

So, which one should we use? If we want to animate several elements on different frequencies, getByteFrequencyData is the appropriate one. However, it requires knowing exactly which ranges of frequencies (in Hz) we want to animate, and what values they reach in the music (lower frequencies are usually stronger than higher ones). My needs are simpler: I want the overall volume of the sound. For this, getFloatTimeDomainData is much more appropriate: the height of the waveform tells us how loud the sound is. So by taking the maximum of the absolute values in the wave, we obtain a volume between 0 and 1:

```ts
// `sound` is the entry created for this instrument in the setup above
sound.analyser.getFloatTimeDomainData(sound.data);
let peak = 0;
// Find the maximum absolute value in the array
for (const amplitude of sound.data) {
  peak = Math.max(Math.abs(amplitude), peak);
}
// For quieter instruments, I use a multiplier to increase the range of their values
const soundLevel = Math.min(1, peak * sound.multiplier);
```

That being said, I do use getByteFrequencyData to animate the background: the clouds move with low frequencies, and the stars with high frequencies. But that's a subject for another time, and the computations are too specific to be relevant anyway.
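The background's computations are specific to the game, but the general step of reading one frequency band is not. As a generic sketch (not the game's actual code), this averages the bytes written by getByteFrequencyData between two frequencies and normalizes the result to 0..1. The helper name `bandLevel` and the band limits in the usage comments are arbitrary examples.

```ts
// Hypothetical helper: average level (0..1) of the bins between lowHz and highHz
function bandLevel(
  bins: Uint8Array,   // filled by analyser.getByteFrequencyData
  sampleRate: number, // context.sampleRate
  fftSize: number,    // analyser.fftSize
  lowHz: number,
  highHz: number,
): number {
  const binWidth = sampleRate / fftSize;
  const first = Math.max(0, Math.ceil(lowHz / binWidth));
  const last = Math.min(bins.length - 1, Math.floor(highHz / binWidth));
  // No bin falls inside the band (too narrow, or out of range)
  if (first > last) return 0;

  let sum = 0;
  for (let i = first; i <= last; i++) sum += bins[i];
  return sum / ((last - first + 1) * 255);
}

// e.g. one low band and one high band (limits chosen arbitrarily):
// const low = bandLevel(bins, context.sampleRate, analyser.fftSize, 20, 250);
// const high = bandLevel(bins, context.sampleRate, analyser.fftSize, 4000, 12000);
```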

Updating

As stated, these methods capture the state of the audio at the moment they are called. So to update it in real time, you have to call them every frame, using requestAnimationFrame.

```ts
function updateVolume() {
  if (!playing) return;
  const volume = readVolume();
  animateWithAudio(volume);
  requestAnimationFrame(() => updateVolume());
}
```

However, the result will likely be jittery: raw audio data generally has a lot of small variations. To obtain a smoother result, I made a small tween function that keeps the value from jumping too quickly from one value to another. It's also better to make the descent slower than the rise: when the volume suddenly gets high, the animation should react immediately, but when it goes down, it should gently fade out.

```ts
let volume = 0;

function tween(from: number, to: number, step: number, stepDown?: number): number {
  return to > from ? Math.min(from + step, to) : Math.max(from - (stepDown ?? step), to);
}

const VOLUME_SMOOTH_UP = 0.27;
const VOLUME_SMOOTH_DOWN = 0.015;

function updateVolume() {
  if (!playing) return;
  volume = tween(volume, readVolume(), VOLUME_SMOOTH_UP, VOLUME_SMOOTH_DOWN);
  animateWithAudio(volume);
  requestAnimationFrame(() => updateVolume());
}
```
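To get a feel for these constants, here is a small simulation that counts how many calls `tween` needs to reach its target. With the values above, the level rises from 0 to 1 in 4 frames, but takes 67 frames (about 1.1 s at 60 fps) to fall back to 0. Since the step is applied once per frame, the fade is also tied to the display's refresh rate: on a 120 Hz screen it runs twice as fast. The `scaleStep` helper is a hypothetical adjustment that rescales the step by the time actually elapsed, which you can measure from the timestamp requestAnimationFrame passes to its callback.

```ts
// Same tween as above
function tween(from: number, to: number, step: number, stepDown?: number): number {
  return to > from ? Math.min(from + step, to) : Math.max(from - (stepDown ?? step), to);
}

const VOLUME_SMOOTH_UP = 0.27;
const VOLUME_SMOOTH_DOWN = 0.015;

// Count how many frames tween() needs to go from `from` to `to`
function framesToReach(from: number, to: number): number {
  let value = from;
  let frames = 0;
  while (value !== to) {
    value = tween(value, to, VOLUME_SMOOTH_UP, VOLUME_SMOOTH_DOWN);
    frames++;
  }
  return frames;
}

const riseFrames = framesToReach(0, 1); // 4
const fallFrames = framesToReach(1, 0); // 67

// Hypothetical: rescale a per-frame step (tuned at 60 fps) to the time actually elapsed
function scaleStep(stepPerFrame: number, elapsedMs: number, referenceFps = 60): number {
  return stepPerFrame * (elapsedMs / (1000 / referenceFps));
}
```

In the update loop, you would keep the previous timestamp and pass `scaleStep(VOLUME_SMOOTH_UP, elapsed)` and `scaleStep(VOLUME_SMOOTH_DOWN, elapsed)` to `tween` instead of the raw constants.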

Animating

Alright, we now have, for each instrument, a value between 0 and 1 that follows its volume! What should we do with it?

For each tile, I wanted to create a kind of light halo around it. The box-shadow CSS property is the perfect tool for that. So I set it up with some CSS variables:

```css
.halo {
  --halo-out-color: white;
  --halo-in-color: white;
  --shadow-color: rgba(from var(--halo-out-color) r g b / calc(var(--level) * 0.5));
  --inner-color: rgba(from var(--halo-in-color) r g b / calc(var(--level) * 0.3));
  --shadow-size: calc(var(--tile-size) * 0.2);
  --shadow-spread: calc(var(--tile-size) * 0.8);
  box-shadow:
    0px 0px var(--shadow-spread) var(--shadow-size) var(--shadow-color),
    inset 0px 0px var(--tile-size) 10px var(--shadow-color);
  border-radius: 10px;
  transition: box-shadow 0.05s;
}
```

The important variable here is --level: this is where we write a value between 0 (no shadow) and 1 (full shadow). The colors can also be customized through another property, hence the other variables.

Also, I want the inside of the tile to light up. I can use the same halo component for this:

```css
.halo {
  /* Lets the absolutely positioned overlay cover the halo itself */
  position: relative;

  &::after {
    pointer-events: none;
    content: '';
    background-color: var(--inner-color);
    position: absolute;
    top: 0;
    left: 0;
    width: 100%;
    height: 100%;
    border-radius: 10px;
  }
}
```

I use Svelte to build this game, so this last part might not be very relevant to you (I've already simplified a lot of the code above to make it generic). But just to close the loop, here is how it's done. I get the instrument's volume from a global store:

```ts
const state: TileState = $derived(store.getTileState(tile));
const volume = $derived(state.soundLevel);
```

I then pass it to the SoundHalo component:

```svelte
<SoundHalo level={volume} {color}>
  <TileBtn {...props}>
    ...
  </TileBtn>
</SoundHalo>
```

And finally, in the halo, I use the level prop to set the CSS variable!

```svelte
<div class={['halo', color]} style="--level: {level}">
```

And like that, we obtain an animation based on the volume of each instrument! A neat visualisation of the music that naturally adapts to it.
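Without Svelte, the same result only requires writing the custom property yourself, for instance from the `animateWithAudio` callback of the update loop. A minimal sketch, assuming an element with the `.halo` class; `applyLevel` is a hypothetical name, and the small `StyleTarget` interface is only there so the function accepts any HTMLElement while staying easy to test.

```ts
// Anything with a style.setProperty method, e.g. an HTMLElement
interface StyleTarget {
  style: { setProperty(name: string, value: string): void };
}

// Write the halo level (clamped to 0..1) into the --level custom property
function applyLevel(target: StyleTarget, level: number): void {
  const clamped = Math.min(1, Math.max(0, level));
  // A few decimals are plenty for an opacity and keep the style string short
  target.style.setProperty('--level', clamped.toFixed(3));
}

// Usage in a browser (hypothetical selector):
// const halo = document.querySelector<HTMLElement>('.halo');
// if (halo) applyLevel(halo, volume);
```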

Other animations

Reading the music data to display it is the "raw" way to create visuals. But as you can see, it can be quite complex and imprecise. A simpler solution is to subscribe to the beat of the music. It won't give you as much granularity, but it lets you trigger basic animations on the beat.

This is one of the features provided by Orchestre-JS. The Orchestre instance has a handy addListener method that triggers a callback at a regular beat interval.

```ts
// Every 2 beats
orchestre.addListener(() => animate(), 2, { absolute: true });

// Every second beat in a bar
orchestre.addListener(() => barAnimate(), 4, { absolute: true, offset: 2 });
```

You can also delay one-off actions with the wait method.

```ts
await orchestre.wait(1); // Wait for next beat
await orchestre.wait(4, { absolute: true }); // Wait for next bar
```
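To picture what `absolute` changes, here is a small sketch of the arithmetic under one assumption: that absolute intervals are aligned on the song's beat grid, counted from beat 0 at the start of the song, which is consistent with the "wait for next bar" comment above. This is not Orchestre-JS's implementation, and `nextAlignedBeat` / `secondsUntil` are hypothetical names.

```ts
// Hypothetical: first beat strictly after `currentBeat` that falls on the grid
// of `interval` beats, shifted by `offset` beats (beats counted from song start)
function nextAlignedBeat(currentBeat: number, interval: number, offset = 0): number {
  const candidate = Math.floor(currentBeat) + 1;
  // Positive modulo, so negative offsets also work
  const remainder = (((candidate - offset) % interval) + interval) % interval;
  return remainder === 0 ? candidate : candidate + interval - remainder;
}

// How long that wait lasts at a given tempo, in seconds
function secondsUntil(currentBeat: number, interval: number, bpm: number, offset = 0): number {
  return ((nextAlignedBeat(currentBeat, interval, offset) - currentBeat) * 60) / bpm;
}
```

For example, on beat 5 of a 4/4 song, the next bar starts on beat 8; at 120 BPM that is 1.5 s away.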

Orchestre-JS is used almost everywhere in Harmonies Garden to synchronise events with the music. And of course, it is also what lets the game's many instruments play together. Check it out if you're curious!

What about the background, though? As mentioned above, the background is animated differently: first because it uses the frequency data, but also because it is actually a shader! That's another subject that deserves its own post. Stay tuned!

Starseed Harmonies (beta)

A musical garden holding secrets

| Status | In development |
| --- | --- |
| Author | Itooh |
